
Why Do I Need a Data Lake?

A Data Lake is an enterprise-wide system for storing and analyzing disparate sources of data in their native formats. A Data Lake might combine sensor data, social media data, click-streams, location data, log files, and much more with traditional data from existing RDBMSes. The goal is to break the information silos in an enterprise by bringing all the data into a single place for analysis without the restrictions of schema, security, or authorization. Data Lakes are designed to store vast amounts of data, even petabytes, in local or cloud-based clusters consisting of commodity hardware.

In a more technical sense, a Data Lake is a set of tools for ingesting, transforming, storing, securing, recovering, accessing, and analyzing all the relevant data of a company. The exact set of tools depends in part on the specific needs and requirements of your business.

Designing your Data Lake

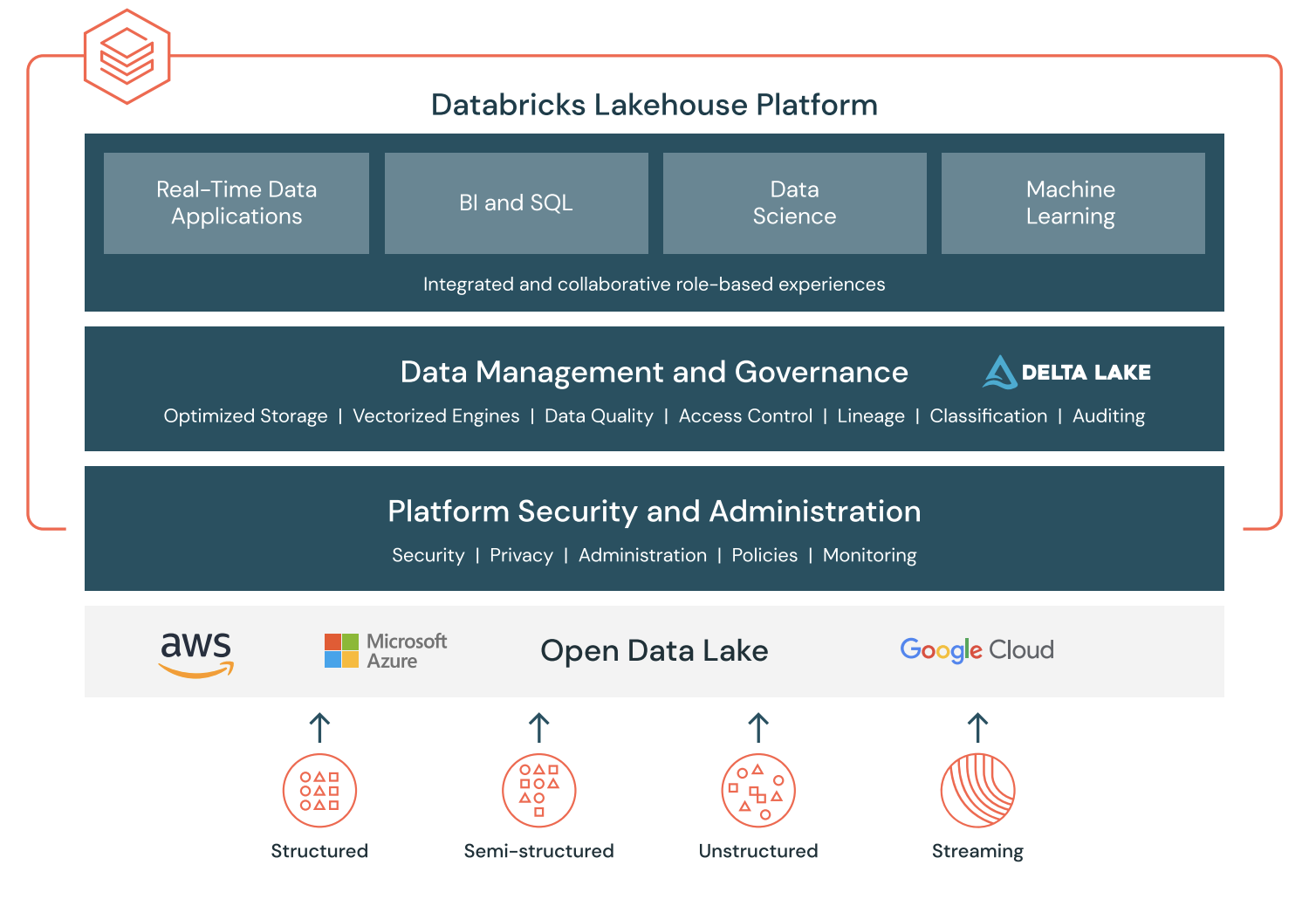

The choice of technologies to be installed will vary depending on the use cases and the streams that need to be processed. Additional considerations could be whether to host a solution internally or to rely on companies providing platforms as a service, like Amazon Web Services (AWS) or Microsoft Azure.

Use cases can be broadly categorized into different streams of processing like batch, streaming, and queries with fast read/write times. An appropriate tool set can be configured depending on the kind of processing that needs to take place.

Building the data lake

Despite the advantages of a central Data Lake, the building process raises some challenges that every enterprise should address. The most important are data management and security for sensitive data. Fortunately, Hadoop ecosystem tools built on top of the core frameworks make Data Lakes an enterprise-ready solution. Taking these common challenges into account, we can reclassify a Data Lake as an Enterprise Data Warehouse that does not require you to predefine metadata or define a specific schema before ingesting data into the system.
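To make the "no predefined schema" idea more concrete, here is a minimal sketch of schema-on-read in plain Python. It is only an illustration, not how any particular Hadoop tool implements it: the sample records, the `READ_SCHEMA` mapping, and the `read_with_schema` helper are all hypothetical names invented for this example.

```python
import json

# Hypothetical raw records, kept exactly as they arrived.
# Nothing validated or reshaped them at write time.
RAW_LINES = [
    '{"device": "pump-1", "temp_c": "71.5", "ts": "2024-01-01T00:00:00"}',
    '{"device": "pump-2", "temp_c": 68, "extra": "ignored"}',
    '{"device": "pump-3"}',
]

# The reader, not the writer, decides which fields matter and how to type them.
READ_SCHEMA = {
    "device": str,
    "temp_c": float,
}


def read_with_schema(lines, schema):
    """Parse raw JSON lines and project each one onto the reader's schema."""
    rows = []
    for line in lines:
        record = json.loads(line)
        row = {}
        for field, cast in schema.items():
            value = record.get(field)
            # Missing fields become None instead of failing the whole read.
            row[field] = cast(value) if value is not None else None
        rows.append(row)
    return rows


rows = read_with_schema(RAW_LINES, READ_SCHEMA)
for row in rows:
    print(row)
```

The raw lines differ in shape (a string temperature, an extra field, a missing field), yet they can all be stored as-is. A different analysis could read the same lines with a different schema without rewriting the stored data.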

Use Cases For a Data Lake

There are a variety of ways you can use a Data Lake:

- Ingestion of semi-structured and unstructured data sources (aka big data) such as equipment readings, telemetry data, logs, streaming data, and so forth. A Data Lake is a great solution for storing IoT (Internet of Things) data, which has traditionally been more difficult to store, and it can support near real-time analysis. Optionally, you can also add structured data (e.g., data extracted from a relational data source) to a Data Lake if your objective is a single repository that makes all data available via the lake.

- Advanced analytics support. A Data Lake is useful for data scientists and analysts to provision and experiment with data.

- Archival and historical data storage. Sometimes data is used infrequently, but does need to be available for analysis. A Data Lake strategy can be very valuable to support an active archive strategy.

- Preparation for data warehousing. Using a Data Lake as a staging area of a data warehouse is one way to utilize the lake, particularly if you are getting started.

- Augment a data warehouse. A Data Lake may contain data that isn't easily stored in a data warehouse, or isn't queried frequently. The Data Lake might be accessed via federated queries through a data virtualization layer, which makes its separation from the data warehouse transparent to end users.

- Distributed processing capabilities associated with a logical data warehouse.

- Storage of all organizational data to support downstream reporting & analysis activities. Some organizations wish to achieve a single storage repository for all types of data. Frequently, the goal is to store as much data as possible to support any type of analysis that might yield valuable findings.

- Application support. In addition to analysis by people, a data lake can be a data source for a front-end application. The data lake might also act as a publisher for a downstream application (though ingestion of data into the data lake for purposes of analytics remains the most frequently cited use).
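Several of the use cases above start with landing data in the lake in its native format. The following is a minimal sketch of that step, assuming a local directory stands in for the lake's storage. The `raw/<source>/<year>/<month>/<day>` layout and the `land_raw` helper are illustrative assumptions, not a standard prescribed by any platform.

```python
import tempfile
from datetime import date
from pathlib import Path


def land_raw(lake_root, source, payload, day, filename):
    """Write the payload bytes unchanged under raw/<source>/<yyyy>/<mm>/<dd>/."""
    target_dir = (
        Path(lake_root) / "raw" / source / f"{day:%Y}" / f"{day:%m}" / f"{day:%d}"
    )
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / filename
    # No parsing or conversion: the file is stored byte for byte.
    target.write_bytes(payload)
    return target


# A temporary directory plays the role of the lake in this sketch.
lake_root = tempfile.mkdtemp()
payload = b'{"sensor": "s-17", "reading": 0.42}\n'
landed = land_raw(lake_root, "iot", payload, date(2024, 3, 5), "batch-0001.json")
print(landed.relative_to(lake_root).as_posix())
```

Partitioning by source and arrival date keeps raw files easy to find and archive later, while leaving the choice of how to interpret them to whoever reads them.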

Key Benefits of a Data Lake

Manage Unlimited Data

A Data Lake can store unlimited data, in its original formats and fidelity, for as long as you need. As a data staging area, it not only prepares data quickly and cost-effectively for use in downstream systems, but also serves as an automatic compliance archive to satisfy internal and external regulatory demands. Unlike traditional archival storage solutions, a Data Lake is an online system: all data is available for query.

Accelerate Data Preparation and Reduce Costs

Increasingly, data processing workloads that previously had to run on expensive systems can migrate to a Data Lake, where they run at very low cost, in parallel, much faster than before. Optimizing the placement of these workloads and the data on which they operate frees capacity on high-end data warehouses, making them more valuable by allowing them to concentrate on the business-critical OLAP and other applications for which they were designed.

Explore and Analyze Fast

Above all else, a Data Lake enables analytic agility. IT can provide analysts and data scientists with a self-service environment to ask new questions and rapidly integrate, combine, and explore any data they need. Structure can be applied incrementally, at the right time, rather than necessarily up front. Not limited to standard SQL, a Data Lake offers options for full-text search, machine learning, scripting, and connectivity to existing business intelligence, data discovery, and analytic platforms. A Data Lake finally makes it cost-effective to run data-driven experiments and analysis over unlimited data.

Build Data Applications

Once developed and tested, analytical models can be instantly deployed at web scale to create real-time data-driven applications. Naturally, these applications themselves generate rich usage data that can be used to better understand user behavior and optimize the models. Creating automated feedback loops is easy in a Data Lake because the architecture provides both analytical and operational capabilities; instrumentation data simply lands right back in the Lake where, as with other data, it’s immediately available for human or machine analysis.

Example Retail Use Case

For example, a large U.S. home improvement retailer stored data on 100 million customer interactions per year across isolated silos, which prevented the company from correlating transactional data with its marketing campaigns and online customer browsing behavior. What this large retailer needed was a “golden record” that unified customer data across all time periods and across all channels, including point-of-sale transactions, home delivery, and website traffic.

By making the golden record a reality, the Data Lake delivers key insights for highly targeted marketing campaigns including customized coupons, promotions and emails. And by supporting multiple access methods (batch, real-time, streaming, in-memory, etc.) to a common data set, it enables users to transform and view data in multiple ways (across various schemas) and deploy closed-loop analytics applications that bring time-to-insight closer to real time than ever before.
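As a rough illustration of what a golden record looks like, the sketch below groups events from three channels by customer. All data and names here (`POS`, `DELIVERY`, `WEB`, `build_golden_records`) are hypothetical, and the example assumes every channel already shares a common `customer_id`. In practice, matching identities across silos is usually the hard part and is not shown.

```python
from collections import defaultdict

# Hypothetical events from three formerly separate silos.
POS = [
    {"customer_id": "c1", "amount": 120.0},
    {"customer_id": "c2", "amount": 35.5},
]
DELIVERY = [{"customer_id": "c1", "order": "d-9"}]
WEB = [
    {"customer_id": "c1", "page": "/paint"},
    {"customer_id": "c3", "page": "/tools"},
]


def build_golden_records(channels):
    """Group every event by customer, keeping track of its source channel."""
    records = defaultdict(lambda: defaultdict(list))
    for channel, events in channels.items():
        for event in events:
            details = {k: v for k, v in event.items() if k != "customer_id"}
            records[event["customer_id"]][channel].append(details)
    # Convert the nested defaultdicts into plain dicts for easier inspection.
    return {cid: dict(by_channel) for cid, by_channel in records.items()}


golden = build_golden_records({"pos": POS, "delivery": DELIVERY, "web": WEB})
for customer_id in sorted(golden):
    print(customer_id, golden[customer_id])
```

With all channels in one structure, a question such as "which customers browsed a product online and later bought in store" becomes a lookup on a single record rather than a join across separate systems.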

Conclusion

In a practical sense, a Data Lake is characterized by three key attributes:

- Collect everything. A Data Lake contains all data, both raw sources over extended periods of time as well as any processed data.

- Dive in anywhere. A Data Lake enables users across multiple business units to refine, explore and enrich data on their terms.

- Flexible access. A Data Lake enables multiple data access patterns across a shared infrastructure: batch, interactive, online, search, in-memory and other processing engines.

Matthias Vallaey

Matthias is the founder of Big Industries and a Big Data Evangelist. He has a strong track record in the IT services and software industry, working across many verticals. He is highly skilled at developing account relationships by bringing innovative solutions that exceed customer expectations. In his role as entrepreneur, he builds partnerships with Big Data vendors and introduces their technology where it brings the most value.