Big Industries Academy

Workshop: Operationalizing the Machine Learning Pipeline

Data Scientists and ML Practitioners need more than a Jupyter notebook to build, test, and deploy ML models into production. They also need to ensure they perform these tasks in a reliable, flexible, and automated fashion.

Three basic questions are worth considering when you start developing an ML model for a real business case:

- How long would it take your organization to deploy a change that involves a single line of code?

- How do you account for concept drift in a model running in production?

- Can you build, test, and deploy models in a repeatable, reliable, and automated way?

If you are not happy with your answers to these questions, MLOps can help you:

- Create or improve your organization's culture and agility around Continuous Integration/Continuous Delivery (CI/CD).

- Build automated infrastructure that supports the processes used to operationalize your ML models.

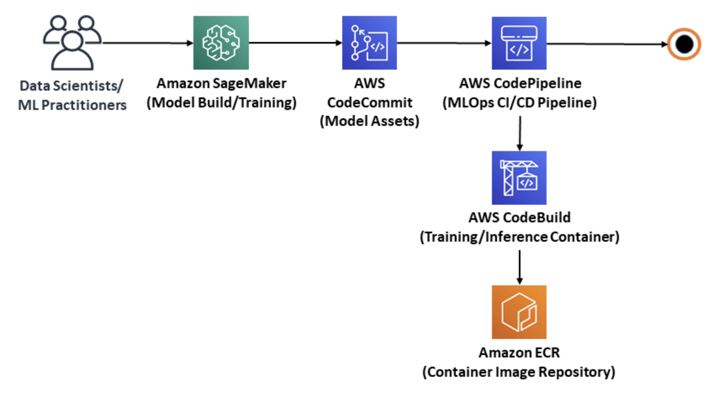

In this workshop you will build an MLOps pipeline that leverages Amazon SageMaker, a service that supports the entire ML model development pipeline and is the heart of this solution. Around it, you will add AWS DevOps tools and services to create an automated CI/CD pipeline for the ML model. This pipeline prepares the data, builds your Docker images, trains and tests the ML model, and then integrates the model into a production workload.
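The stage ordering described above can be sketched in plain Python. This is a minimal, hypothetical illustration of fail-fast sequencing, not the workshop's implementation. In the real pipeline each stage is carried out by AWS services. Here the stage functions, the placeholder accuracy value, and the `min_accuracy` threshold are all stand-ins.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# A stage reads and updates a shared context dict and returns True on success.
Stage = Callable[[Dict[str, object]], bool]


@dataclass
class Pipeline:
    """Run named stages in order and stop at the first failure (fail fast)."""

    stages: List[Tuple[str, Stage]] = field(default_factory=list)

    def add(self, name: str, stage: Stage) -> "Pipeline":
        self.stages.append((name, stage))
        return self  # allow chaining

    def run(self, context: Dict[str, object]) -> List[str]:
        """Return the names of the stages that completed successfully."""
        completed: List[str] = []
        for name, stage in self.stages:
            if not stage(context):
                break  # a failed stage blocks every downstream stage
            completed.append(name)
        return completed


# Hypothetical stand-ins for work done by AWS services in the real pipeline.
def prepare_data(ctx: Dict[str, object]) -> bool:
    ctx["processed"] = [x / 10 for x in ctx["raw"]]  # toy "transformation"
    return True


def build_images(ctx: Dict[str, object]) -> bool:
    ctx["image"] = "training-image:latest"  # placeholder image tag
    return True


def train_and_test(ctx: Dict[str, object]) -> bool:
    ctx["accuracy"] = 0.91  # placeholder metric, not a real training result
    return ctx["accuracy"] >= ctx["min_accuracy"]


def deploy(ctx: Dict[str, object]) -> bool:
    ctx["deployed"] = True
    return True


pipeline = (
    Pipeline()
    .add("prepare_data", prepare_data)
    .add("build_images", build_images)
    .add("train_and_test", train_and_test)
    .add("deploy", deploy)
)
print(pipeline.run({"raw": [1, 2, 3], "min_accuracy": 0.90}))
# ['prepare_data', 'build_images', 'train_and_test', 'deploy']
```

Halting at the first failed stage captures the core CI/CD guarantee: a model that fails its tests never reaches the deployment step.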

The workshop consists of four primary modules that, from the perspective of the ML Practitioner, focus on creating and executing the MLOps CI/CD pipeline:

Configuring the MLOps CI/CD Pipeline Source and Data Repositories

- The ML Practitioner creates a repository in AWS CodeCommit to store model source code.

- Additionally, they create repositories to store Model Training and Inference images.

- The ML Practitioner uses Amazon S3 to store both the raw (unprocessed) training data and the pre-processed (transformed) training data.

Configuring the MLOps CI/CD Pipeline Assets

- The ML Practitioner uses AWS CodeCommit and AWS CodeBuild to store and build the pipeline automation components, or assets.

- The ML Practitioner also tests the ML model locally to ensure these assets are functional before the pipeline deploys them.
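Local testing of this kind might look like the minimal sketch below. It assumes a hypothetical inference handler that accepts CSV feature rows and returns JSON predictions. The fixed linear "model" (`WEIGHTS`, `BIAS`) and the `handle_invocation` name are invented for illustration and are not part of the workshop's assets. The point is only to run cheap checks before anything is deployed.

```python
import csv
import io
import json
from typing import List

# Hypothetical stand-in for a trained model: a fixed linear scorer.
WEIGHTS = [0.5, -0.25, 1.0]
BIAS = 0.1


def predict(rows: List[List[float]]) -> List[float]:
    """Score each feature row with the stand-in linear model."""
    return [sum(w * x for w, x in zip(WEIGHTS, row)) + BIAS for row in rows]


def handle_invocation(body: str) -> str:
    """Hypothetical inference handler: CSV rows in, JSON predictions out."""
    rows = [[float(v) for v in rec] for rec in csv.reader(io.StringIO(body)) if rec]
    for row in rows:
        if len(row) != len(WEIGHTS):
            raise ValueError(f"expected {len(WEIGHTS)} features, got {len(row)}")
    return json.dumps({"predictions": predict(rows)})


def smoke_test() -> None:
    """Checks worth running locally before the pipeline deploys anything."""
    out = json.loads(handle_invocation("1,2,3\n0,0,0\n"))
    assert len(out["predictions"]) == 2               # one prediction per row
    assert abs(out["predictions"][1] - BIAS) < 1e-9   # zero input -> bias only
    try:
        handle_invocation("1,2\n")                    # wrong feature count
    except ValueError:
        pass
    else:
        raise AssertionError("malformed input was accepted")


smoke_test()
print("local smoke test passed")
```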

Building and Executing the MLOps CI/CD Pipeline

- Because the components are codified, the ML Practitioner deploys the infrastructure as code using AWS CloudFormation.

- Then, as a stage of the pipeline, the ML model is trained automatically using Amazon SageMaker.
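To illustrate the infrastructure-as-code idea, the sketch below generates a minimal CloudFormation template for the kinds of resources described in the first module. It declares a CodeCommit repository for model source, two image repositories, and one S3 bucket for training data. These are assumptions, not the workshop's actual templates. In particular, the choice of Amazon ECR for the image repositories and all resource names are hypothetical.

```python
import json


def build_template(project: str) -> dict:
    """Return a minimal CloudFormation template (as a dict) for the MLOps sources.

    Resource names are hypothetical; ECR is assumed for the image repositories.
    """
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": f"Source, image, and data repositories for {project}",
        "Resources": {
            "ModelSourceRepo": {
                "Type": "AWS::CodeCommit::Repository",
                "Properties": {
                    "RepositoryName": f"{project}-model-source",
                    "RepositoryDescription": "ML model source code",
                },
            },
            "TrainingImageRepo": {
                "Type": "AWS::ECR::Repository",
                "Properties": {"RepositoryName": f"{project}-training"},
            },
            "InferenceImageRepo": {
                "Type": "AWS::ECR::Repository",
                "Properties": {"RepositoryName": f"{project}-inference"},
            },
            # A single bucket; raw and processed data could live under separate prefixes.
            "DataBucket": {"Type": "AWS::S3::Bucket"},
        },
        "Outputs": {
            "SourceCloneUrl": {"Value": {"Fn::GetAtt": ["ModelSourceRepo", "CloneUrlHttp"]}},
            "TrainingImageUri": {"Value": {"Fn::GetAtt": ["TrainingImageRepo", "RepositoryUri"]}},
            "DataBucketName": {"Value": {"Ref": "DataBucket"}},
        },
    }


if __name__ == "__main__":
    # Print the template as JSON so it can be redirected to a file.
    print(json.dumps(build_template("mlops-workshop"), indent=2))
```

Redirect the output to `template.json`. A stack could then be created with `aws cloudformation deploy --template-file template.json --stack-name mlops-workshop-sources`, where the stack name is again only an example.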

Managing the MLOps CI/CD Pipeline Deployment

- The trained model is then deployed into a staging environment, DEV (QA/Development, simple endpoint), where it is tested to ensure not only that the deployed infrastructure is functional, but also that the model meets the business use case requirements for production. This testing is automated to further streamline the pipeline flow.

- After the model passes these tests, it is deployed into production, PRD (Production, HA/Elastic endpoint). Here the ML Practitioner verifies that the production environment can scale and monitors the model to ensure that it maintains a predetermined level of performance, or consistent model quality.
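Both steps above rest on the same idea: compare observed model metrics against predetermined thresholds. The minimal sketch below applies one gate function in two places. It uses automated staging results to decide promotion, and it uses rolling production accuracy to flag degradation, which is one common signal of concept drift. The metric names, thresholds, and window size are illustrative assumptions, not values from the workshop.

```python
from collections import deque
from typing import Deque, Dict


def passes_gate(metrics: Dict[str, float], thresholds: Dict[str, float]) -> bool:
    """True if every thresholded metric is present and at or above its minimum."""
    return all(metrics.get(name, float("-inf")) >= minimum
               for name, minimum in thresholds.items())


class RollingAccuracyMonitor:
    """Track accuracy over the most recent `window` labelled predictions."""

    def __init__(self, window: int, minimum: float) -> None:
        self.outcomes: Deque[bool] = deque(maxlen=window)  # True = correct
        self.minimum = minimum

    def record(self, predicted, actual) -> None:
        self.outcomes.append(predicted == actual)

    def accuracy(self) -> float:
        # Assumes at least one outcome has been recorded.
        return sum(self.outcomes) / len(self.outcomes)

    def healthy(self) -> bool:
        if not self.outcomes:
            return True  # no labelled feedback yet, nothing to judge
        return passes_gate({"accuracy": self.accuracy()}, {"accuracy": self.minimum})


# Staging (DEV): promote only if the automated test metrics clear the bar.
staging_metrics = {"accuracy": 0.93, "recall": 0.88}
thresholds = {"accuracy": 0.90, "recall": 0.85}
print("promote to PRD:", passes_gate(staging_metrics, thresholds))  # promote to PRD: True

# Production (PRD): flag the model once rolling accuracy drops below the bar.
monitor = RollingAccuracyMonitor(window=4, minimum=0.75)
for predicted, actual in [(1, 1), (0, 0), (1, 0), (1, 1), (0, 1), (1, 0)]:
    monitor.record(predicted, actual)
print("rolling accuracy:", monitor.accuracy(), "healthy:", monitor.healthy())
# rolling accuracy: 0.25 healthy: False
```

A missing metric counts as a failure in `passes_gate`. This way, a test run that silently skips a metric cannot promote a model by accident.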

Matthias Vallaey

Matthias is the founder of Big Industries and a Big Data evangelist. He has a strong track record in the IT services and software industry, working across many verticals. He is highly skilled at developing account relationships by bringing innovative solutions that exceed customer expectations. As an entrepreneur, he builds partnerships with Big Data vendors and introduces their technology where it brings the most value.